Businesses and organizations generate vast amounts of unstructured data every day. This data often contains valuable insights that can inform future business decisions, improve efficiency, and drive innovation. However, much of this data remains untapped due to concerns surrounding privacy and data security. Organizations are reluctant to utilize or share historical data because it often contains sensitive or personal information, which, if mishandled, could lead to legal and reputational risks.

This is where Styrk’s Cypher, a solution to identify and mask sensitive data from unstructured data sources (such as PDFs, Word documents, text files, and even images), steps in. Cypher ensures that organizations can safely reuse historical data without compromising privacy or security.

The challenge: Valuable data trapped by privacy concerns

For years, organizations have amassed huge volumes of unstructured data, including legal contracts, customer communications, medical records, financial reports, and more. Often, these documents contain personally identifiable information (PII), financial data, or other sensitive content that is subject to strict data privacy regulations.

Because of these privacy concerns, historical data is often shelved or deleted to avoid compliance issues. Organizations face significant obstacles when it comes to extracting the valuable insights locked away in this data, especially without compromising privacy or inadvertently exposing sensitive information.

Take the example of a healthcare provider organization wanting to conduct a study on past patient outcomes. The organization possesses decades of medical records, filled with valuable data, but it cannot reuse or share them without risking the exposure of patient identities and medical information. Manually anonymizing large datasets is time-consuming, prone to human error, and requires significant expertise in data security.

The solution: Cypher for historical data reuse

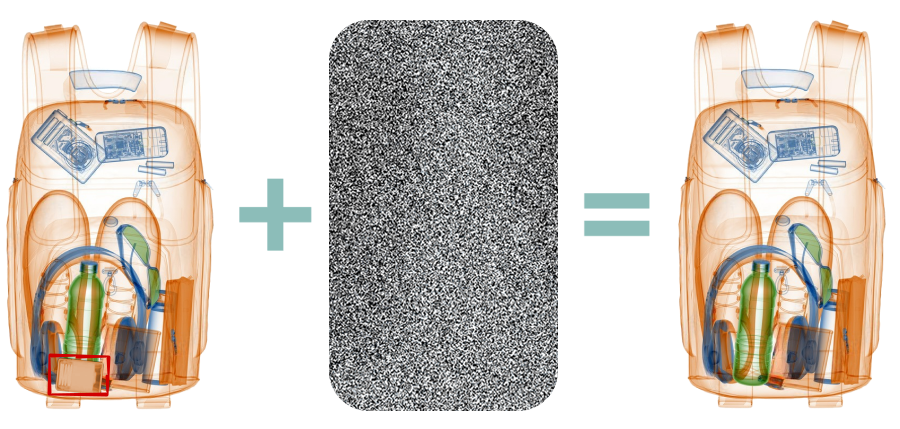

Cypher offers a powerful solution to this dilemma by enabling organizations to safely reuse historical unstructured data. By identifying and masking sensitive information automatically, Cypher helps organizations maintain compliance with privacy regulations while leveraging the information contained in their historical data.

Cypher’s advanced algorithms can process and analyze a wide range of unstructured file types—be they text-heavy PDFs, word documents, or scanned image files. By recognizing patterns associated with sensitive data (like names, addresses, Social Security numbers, or credit card information), Cypher can accurately detect and mask such information across large datasets. This process allows organizations to reuse their historical data with full confidence that no sensitive data will be inadvertently disclosed.

Key benefits of Cypher in historical data reuse

Unlocking hidden value:

With Cypher’s masking technology, organizations can safely access historical data that was previously off-limits due to privacy concerns. Whether it’s decades-old contracts, customer feedback, or archived financial data, these documents contain rich information that can be used for trend analysis, decision-making, and forecasting.

Automated detection and masking:

The solution eliminates the need for manual review by leveraging AI to automate the detection of sensitive data. Cypher scans unstructured data at scale, identifying PII and other confidential information that must be masked, drastically reducing the time and effort required to prepare data for reuse.

Preservation of data integrity:

While Cypher effectively masks sensitive information, it maintains the structure and integrity of the underlying data. This ensures that historical data remains valuable for analysis, research, and reporting purposes, even after sensitive elements have been removed.

Real-world example: A financial institution’s data dilemma

Consider a financial institution that has been operational for over 50 years. The company possesses an enormous archive of customer transaction records, loan agreements, and financial reports stored as unstructured data. These documents contain vast amounts of business intelligence that could offer insights into market trends, customer behavior, and operational improvements.

However, many of these files contain sensitive information such as account numbers, personal addresses, and financial details that must be protected. Historically, the institution has been unable to fully leverage this data for fear of violating privacy laws and exposing customers’ personal information. By implementing Cypher, the financial institution can securely process these files. Cypher scans the archive, identifies sensitive data, and applies masking techniques to anonymize it. The institution can then reuse its historical data to conduct deep-dive analysis, predictive modeling, and market research—all without risking compliance violations or customer trust.

Historical data reuse in a privacy-conscious world

As organizations seek to derive more value from their data, the ability to safely reuse historical information is becoming a critical competitive advantage. Privacy makes it possible for companies to unlock the full potential of their unstructured data while ensuring that sensitive information is fully protected.

With Cypher’s automated detection and masking capabilities, businesses across industries—from healthcare and finance to legal and government—can confidently reuse their historical data, gaining new insights and making more informed decisions, all while staying compliant with ever-evolving privacy regulations.

In an era where data is the lifeblood of business strategy, Cypher provides the key to unlocking the value of historical data without sacrificing privacy and security. By ensuring that sensitive information is identified and protected, Cypher empowers organizations to confidently reuse their data for innovation and growth.