The recent AI FedLab event convened a cross-section of federal leaders, technologists, and researchers for a day of deep discussion on artificial intelligence’s growing role in government. The event’s roundtables and networking sessions surfaced not only the promise of AI for national security, infrastructure, and innovation, but also the practical challenges of responsible adoption. As agencies look to scale AI, several themes and strategies emerged—offering a roadmap for the next phase of federal AI integration.

Pillar 1: Embracing a “One Government” Approach

A central theme was the federal government’s shift toward unified technology innovation. The General Services Administration (GSA) is positioning itself as an enabler, streamlining procurement and fostering a “one government” mindset. This approach aims to break down silos, encourage cross-agency collaboration, and ensure access to best-in-class tools.

To realize this vision, agencies should prioritize solutions that are interoperable and avoid vendor lock-in. Flexible architectures and open standards are key, enabling agencies to select, share, and switch between AI models as mission needs evolve. This also means building processes and workflows that are “AI native” from the outset, maximizing efficiency and adaptability.
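
One way to picture "select, share, and switch between AI models" is a thin interface that mission code depends on, with vendor-specific backends registered behind it. The sketch below is illustrative only: `TextModel`, `ModelRegistry`, `EchoModel`, and `UppercaseModel` are hypothetical names, and the two backends are stand-ins rather than real model integrations.

```python
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical minimal interface every model backend must satisfy."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend; a real one would wrap a vendor or open-source model."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseModel:
    """Second stand-in backend, used to show that swapping is a one-line change."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


class ModelRegistry:
    """Maps a logical name to a backend so callers never import a vendor SDK directly."""

    def __init__(self) -> None:
        self._backends: dict[str, TextModel] = {}

    def register(self, name: str, backend: TextModel) -> None:
        self._backends[name] = backend

    def get(self, name: str) -> TextModel:
        if name not in self._backends:
            raise KeyError(f"no backend registered under {name!r}")
        return self._backends[name]


def summarize(model: TextModel, document: str) -> str:
    """Mission code depends only on the TextModel interface."""
    return model.generate(f"Summarize: {document}")


registry = ModelRegistry()
registry.register("default", EchoModel())
registry.register("alternate", UppercaseModel())
```

Because `summarize` only knows about the interface, moving a workflow from one model to another is a change to the registry lookup, not to the workflow itself.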

Pillar 2: Addressing Environmental and Infrastructure Costs

The environmental footprint of generative AI is coming into sharper focus. As highlighted by the GAO, large language models can rapidly consume hardware resources and drive up energy and water usage. The call for transparency—such as disclosing energy and water consumption—reflects a growing need for sustainable AI practices.

Agencies should consider not just the performance of AI models, but also their operational and environmental costs. This may involve evaluating the lifecycle impact of AI deployments, adopting more efficient or specialized models, and implementing monitoring mechanisms to assess ongoing resource consumption.
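
A monitoring mechanism can start very simply, for example by logging the average power draw and duration of each workload and converting that to energy. The sketch below assumes the operator supplies both measurements; `InferenceRun` and its fields are hypothetical names, and the calculation covers electricity only, not water use or hardware lifecycle impact.

```python
from dataclasses import dataclass


@dataclass
class InferenceRun:
    """One logged workload; all values are supplied by the operator."""

    label: str
    avg_power_watts: float   # measured or vendor-reported average draw
    duration_seconds: float  # wall-clock time of the run


def energy_kwh(run: InferenceRun) -> float:
    """Energy = power x time, converted from watt-seconds (joules) to kWh."""
    return run.avg_power_watts * run.duration_seconds / 3_600_000


def total_energy_kwh(runs: list[InferenceRun]) -> float:
    """Sum the estimated energy across all logged runs."""
    return sum(energy_kwh(r) for r in runs)
```

Tracking this figure per model and per use case gives a baseline for comparing a large general-purpose model against a smaller or more specialized one on the same task.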

Pillar 3: The Foundational Role of Data Infrastructure

Data quality and governance were repeatedly cited as the backbone of effective AI. Leaders stressed that without strong, well-understood data, even the best AI models will fall short. The challenge is compounded by the vast amount of underutilized unstructured data—estimated at 90% of agency content.

Unlocking the value of unstructured data requires robust tools for metadata extraction, data cleaning, and sensitive information sanitization. Agencies should invest in solutions that can automate the identification and protection of personal and sensitive data, making it safe for AI consumption. Establishing clear data lineage, provenance, and bias detection practices is also essential for building trust and reproducibility in AI outcomes.
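
As a rough illustration of automated sanitization, the sketch below replaces two common kinds of sensitive values in free text with placeholder tags and reports how many of each it found. The patterns are deliberately simple assumptions (a U.S. Social Security number format and a basic email shape); a production tool would need far broader coverage, context-aware detection, and human review.

```python
import re

# Illustrative patterns only; real PII detection needs many more categories.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a [LABEL] tag and count replacements per label."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


clean, found = redact("Contact jane.doe@example.gov, SSN 123-45-6789.")
# clean == "Contact [EMAIL], SSN [SSN]."
# found == {"SSN": 1, "EMAIL": 1}
```

Returning the counts alongside the cleaned text gives a simple record of what was removed from each document, which can feed the lineage and provenance practices described above.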

Pillar 4: Championing Open Competition and Interoperability

There was a strong call to move away from “winner takes all” procurement. Open competition and interoperability are seen as vital for ensuring agencies can access the best-fit solutions for their missions, while modernizing legacy systems without disruption.

Adopting modular, standards-based approaches—akin to “Lego blocks”—can help agencies integrate new technologies with existing infrastructure. Contracts should be structured to ensure data ownership remains with the government, and to prevent unauthorized use of government data for model training. This approach not only fosters innovation but also protects agency interests and mission integrity.

Pillar 5: Expanding Use Cases and Scaling Successes

Federal AI use cases are proliferating, from automating grant reviews to monitoring border cameras and optimizing resource allocation. Agencies are now focused on scaling these successes, often starting with small pilots in secure environments before broader rollout.

Scaling AI responsibly requires embedding security, fairness, and privacy into every stage of the AI lifecycle. Agencies should leverage tools that can test models for vulnerabilities and bias, and provide actionable recommendations for mitigation—without requiring full retraining. This enables rapid, safe scaling of AI capabilities across diverse mission areas.
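
Bias testing can begin with simple group-level measurements taken on a model's existing predictions, which requires no retraining. The sketch below computes one common fairness measure, the demographic parity difference (the gap between the highest and lowest rate of positive predictions across groups). It covers measurement only, not mitigation, and the function names and sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Gap between the highest and lowest group selection rate (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up example: group "a" is selected 3 of 4 times, group "b" 1 of 4 times.
preds = [1, 1, 0, 1, 0, 0, 0, 1]
grps = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(preds, grps)  # 0.75 - 0.25 = 0.5
```

A single number like this does not settle whether a model is fair, but tracking it across pilots makes regressions visible before a broader rollout.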

Deep Dives: Trust, Privacy, Risk, and Cybersecurity

Trustworthy AI

Ensuring AI systems are reliable, fair, and secure was a recurring theme. This includes not only technical measures—like data cleanliness, model testing, and auditability—but also human factors, such as clear communication and oversight. Standards for data provenance and transparency in training data are increasingly important, especially as machine-generated data proliferates.

Data Privacy

With evolving mandates and the complexity of data types (PII, PHI, etc.), agencies face challenges in safeguarding sensitive information. Effective strategies include automated data sanitization, robust audit trails, and clear boundaries for data containment. Recognizing that bias cannot be eliminated entirely, agencies should focus on continuous bias detection and mitigation.
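
One way to make an audit trail robust is to make it tamper-evident: each entry stores a hash of the previous entry, so any later change to an earlier record breaks the chain. The sketch below is a minimal in-memory illustration using SHA-256; the entry fields and function names are assumptions, and a real system would also need secure storage, access control, and trusted timestamps.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with this event's content."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list[dict], event: dict) -> None:
    """Append an event, linking it to the hash of the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify(log: list[dict]) -> bool:
    """Recompute every hash in order; any edited or reordered entry fails."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, {"actor": "analyst-1", "action": "read", "dataset": "grants"})
append_entry(audit_log, {"actor": "pipeline", "action": "sanitize", "dataset": "grants"})
```

Calling `verify(audit_log)` after the two appends returns `True`; changing any field of an earlier event afterward makes it return `False`.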

Risk Management

AI risk is multifaceted, encompassing data exposure, mission impact, and the risk of inaction. Agencies are encouraged to adopt comprehensive risk management frameworks, quantify risks and benefits, and prioritize use cases that deliver the greatest mission impact. Engaging with vendors on model testing, benchmarking, and transparency is critical.

Cybersecurity

AI expands the attack surface, introducing new vectors for adversarial threats and data poisoning. Agencies should implement proactive testing, such as red teaming of AI models, to identify vulnerabilities before deployment. Tangible harms (security) and intangible harms (bias, safety) call for different validation approaches, and both demand ongoing vigilance.

Moving Forward

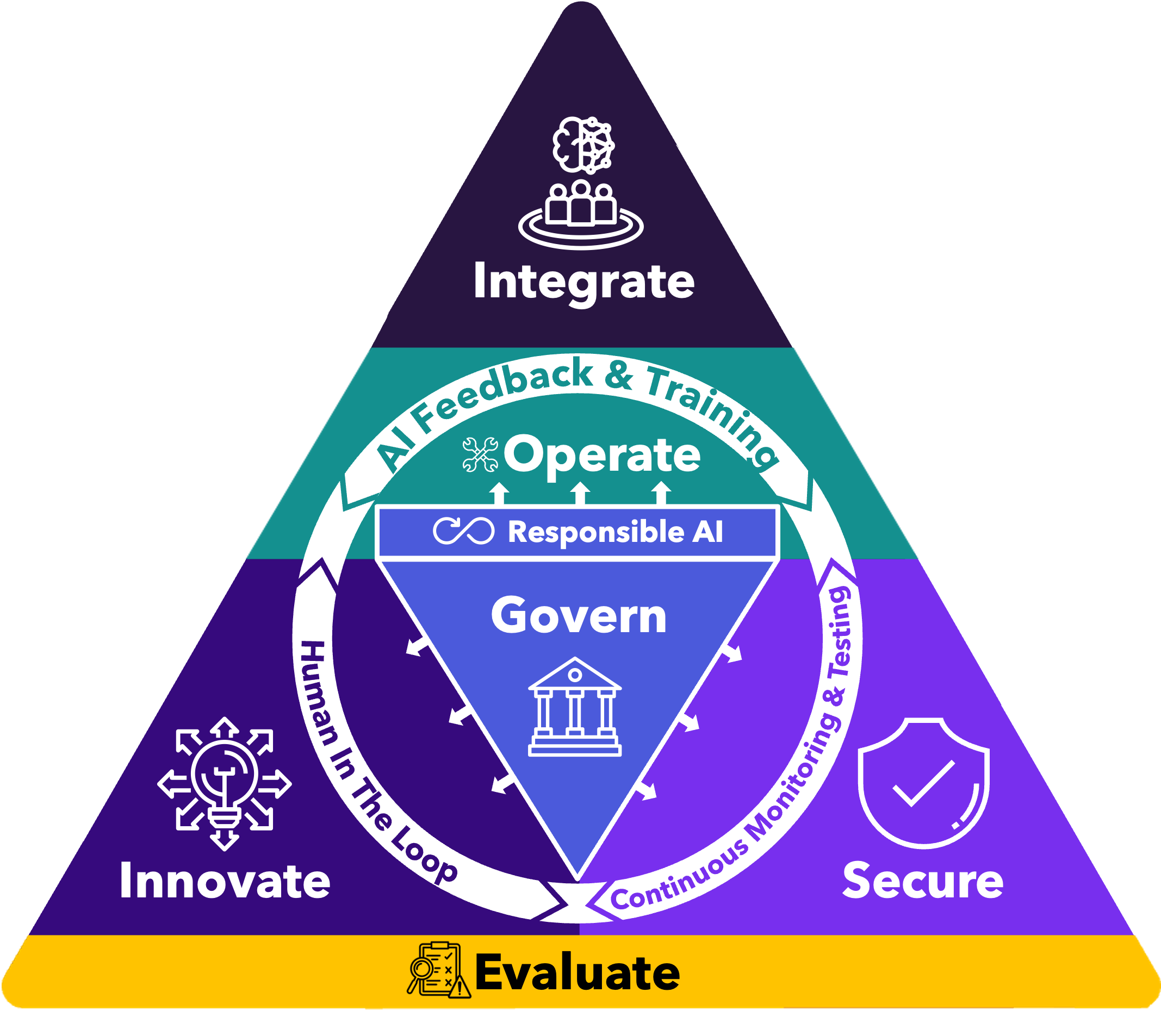

To address the challenges raised in AI FedLab’s deep dives on trust, privacy, risk, and cybersecurity, organizations can use comprehensive AI risk management solutions. Using such tools to measure, monitor, and mitigate vulnerabilities can improve resilience against adversarial threats and help reduce unfairness in model outputs. These tools can also provide a security, fairness, and privacy layer for GenAI applications, acting as a guardrail that enforces data governance policies and ethical guidelines. By integrating these capabilities, agencies can navigate the complexities of AI adoption with greater confidence and work toward systems that are trustworthy, secure, and aligned with ethical principles.

Governance, Measurement, and the Path Forward

Effective AI governance is as much about people as it is about technology. Agencies must define clear roles for data stewardship, ensure compliance with evolving regulations, and provide training to empower staff. Measurement and evaluation frameworks are still maturing, but interdisciplinary approaches—combining technical, policy, and mission expertise—are essential.

Piloting new AI capabilities in “safe spaces” allows for rapid experimentation and learning, with the flexibility to scale successes or sunset failures. Agencies should balance in-house development for unique needs with leveraging commercial solutions for efficiency and scalability.

Conclusion: Collaboration and Continuous Learning

AI FedLab underscored the importance of collaboration—across agencies, with industry, and among practitioners. The path forward for federal AI is multifaceted, requiring a blend of robust data practices, responsible risk management, and a commitment to trustworthy, secure, and fair AI. By focusing on these strategies, agencies can unlock the transformative potential of AI while safeguarding mission integrity and public trust.

Comprehensive AI risk management solutions can give agencies the tools and strategies to deploy and scale AI initiatives with confidence while staying aligned with mission objectives. Get started for free to learn how Styrk AI measures, monitors, and mitigates AI/ML risks to make models more robust and resilient against security, privacy, and fairness issues.