Introduction

Machine Learning Operations (MLOps) has rapidly evolved to streamline the deployment of AI systems across industries. While organizations focus on model accuracy, scalability, and efficiency, two critical elements often remain in the shadows: security and fairness. This blind spot is a growing vulnerability as adversarial attacks become more sophisticated and bias in AI systems faces increasing scrutiny.

As we deploy ML models that make decisions affecting people’s lives, finances, and safety, we must address these vulnerabilities with the same rigor we apply to traditional cybersecurity. This post explores the often-overlooked challenges of adversarial attacks and bias in MLOps, why they matter, and how organizations can build more resilient and fair ML systems.

The Growing Threat of Adversarial Attacks

Adversarial attacks represent a unique class of threats specifically targeting machine learning models. Unlike traditional cyberattacks focused on network infrastructure or software vulnerabilities, adversarial attacks exploit the fundamental way ML models learn and make decisions.

What Makes These Attacks So Dangerous?

Adversarial attacks can be devastatingly effective while looking innocuous, or even imperceptible, to human observers. Consider these examples:

- Small, graffiti-like stickers on a physical stop sign that cause a road-sign classifier, of the kind an autonomous vehicle might rely on, to read it as a speed limit sign[^1]

- Audio that sounds like meaningless noise to humans but that voice assistants interpret and execute as commands[^2]

- Carefully crafted text inputs that bypass content moderation systems while still conveying harmful content[^3]

These attacks don’t require breaking into systems or exploiting code vulnerabilities—they manipulate the input data in ways that fool the model while appearing normal to humans.
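The following is a minimal sketch of the fast gradient sign method (FGSM), one well-known way to craft such inputs. It makes the mechanism concrete using a toy logistic-regression classifier, not any of the systems cited above. The weights, the input, and `epsilon` are illustrative assumptions. For a linear model, the gradient of the cross-entropy loss with respect to the input is simply `(p - y) * w`, so no autodiff library is needed.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(x @ w + b)


def fgsm(w, b, x, y, epsilon):
    """Fast gradient sign method for logistic regression.

    For binary cross-entropy, d(loss)/dx = (p - y) * w, so each feature is
    moved by epsilon in whichever direction increases the loss.
    """
    p = predict_proba(w, b, x)
    grad_x = (p - y) * w
    return x + epsilon * np.sign(grad_x)


# Toy model and input (illustrative values, not from any real system).
w = np.array([2.0, -3.0, 1.5, 0.5])
b = 0.0
x = np.array([0.4, 0.1, 0.2, 0.3])
y = 1.0  # true label

x_adv = fgsm(w, b, x, y, epsilon=0.15)
print("clean prob:", predict_proba(w, b, x))
print("adv prob:  ", predict_proba(w, b, x_adv))
print("largest per-feature change:", np.max(np.abs(x_adv - x)))
```

Every feature moves by at most 0.15. Even so, the predicted probability of the true class drops from roughly 0.72 to roughly 0.48, and the label flips. Each small change pushes the model's score in the same harmful direction.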

The Persistent Challenge of Bias

While adversarial attacks represent intentional manipulation, bias represents a more insidious threat—often unintentional but equally harmful. ML systems learn patterns from historical data, and when that data contains societal biases, the resulting models perpetuate and sometimes amplify those biases.

Real-World Consequences of Biased Models

Bias in ML systems isn’t merely a theoretical concern; it has tangible impacts:

- Healthcare algorithms that allocate less care to Black patients than white patients with the same level of need[^4]

- Commercial facial-analysis systems that misclassify the gender of darker-skinned women far more often than that of lighter-skinned men[^5]

- Hiring algorithms that favor male candidates over equally qualified female candidates[^6]

Why Security and Fairness Often Go Overlooked

Despite the significant risks, model security and fairness frequently remain afterthoughts in the ML development lifecycle. There are several reasons for this:

1. Misaligned Incentives

Organizations are primarily incentivized to deploy models quickly and maximize performance metrics like accuracy. Security and fairness testing can be seen as bottlenecks that slow down deployment and potentially reduce performance metrics.

2. Lack of Standardized Testing Frameworks

While we have established methodologies for testing traditional software security, frameworks for systematically evaluating ML models against adversarial attacks or bias are still emerging. This makes it difficult for teams to integrate these considerations into their workflows.

3. Complexity and Expertise Gap

Effectively addressing adversarial robustness and bias requires specialized knowledge spanning machine learning, security, ethics, and domain expertise. This interdisciplinary expertise is rare and difficult to develop or acquire.

4. Invisibility of the Problem

Until a system fails catastrophically or faces public scrutiny for biased decisions, the vulnerabilities remain invisible. As one researcher noted, “You don’t know you have a problem until you have a problem.”

The Intersection of Security and Fairness

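Adversarial attacks and bias are interconnected challenges. Some research suggests that models that are more robust to adversarial attacks can also behave more consistently across demographic groups[^7]. The relationship is not automatic, however: adversarial training has also been observed to widen accuracy gaps between classes. Because improving one property can help or hurt the other, addressing these issues together is likely to yield better results than tackling them separately.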

Building More Resilient and Fair ML Systems

Addressing these challenges requires a comprehensive approach that integrates security and fairness throughout the MLOps lifecycle.

1. Expanding the Definition of Model Performance

We need to move beyond accuracy as the primary metric for model success. Performance should include:

- Robustness metrics: How well does the model perform against various adversarial attacks?

- Fairness metrics: Does the model perform consistently across different demographic groups?

- Explainability measures: Can the model’s decisions be understood and verified by humans?
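The functions below are a rough sketch of how robustness and fairness metrics could sit alongside accuracy in one evaluation report. They compute clean accuracy, accuracy on adversarially perturbed inputs ("robust accuracy"), per-group accuracy, and the demographic parity difference (the gap between groups' positive-prediction rates). The function names, the dictionary keys, and the choice of these particular metrics are assumptions for illustration. Libraries such as Fairlearn provide maintained implementations of many fairness metrics.

```python
import numpy as np


def accuracy(y_true, y_pred):
    return float(np.mean(y_true == y_pred))


def per_group_accuracy(y_true, y_pred, groups):
    """Accuracy computed separately for each demographic group."""
    return {str(g): accuracy(y_true[groups == g], y_pred[groups == g])
            for g in np.unique(groups)}


def demographic_parity_difference(y_pred, groups):
    """Largest gap in positive-prediction rate between any two groups."""
    rates = [np.mean(y_pred[groups == g]) for g in np.unique(groups)]
    return float(max(rates) - min(rates))


def evaluation_report(y_true, y_pred_clean, y_pred_adv, groups):
    """Bundle accuracy, robustness, and fairness metrics in one place."""
    return {
        "accuracy": accuracy(y_true, y_pred_clean),
        "robust_accuracy": accuracy(y_true, y_pred_adv),
        "per_group_accuracy": per_group_accuracy(y_true, y_pred_clean, groups),
        "demographic_parity_diff": demographic_parity_difference(y_pred_clean, groups),
    }


# Illustrative predictions for eight examples from two groups, "a" and "b".
y_true = np.array([1, 0, 1, 1, 0, 1, 0, 0])
y_clean = np.array([1, 0, 1, 1, 0, 0, 0, 0])  # predictions on clean inputs
y_adv = np.array([1, 1, 0, 1, 0, 0, 1, 0])     # predictions on attacked inputs
groups = np.array(["a", "a", "a", "a", "b", "b", "b", "b"])

report = evaluation_report(y_true, y_clean, y_adv, groups)
print(report)
```

In this toy data, clean accuracy looks healthy, but robust accuracy is much lower. Group "b" is also never predicted positive. Neither gap is visible from accuracy alone.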

2. Implementing Adversarial Training

One of the most effective defenses against adversarial attacks is to incorporate them into the training process. By exposing models to adversarial examples during training, they become more robust to these attacks during deployment[^8].

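```python
import torch
import torch.nn.functional as F


# Simplified example of adversarial training. FGSM is used here as a simple
# stand-in attack; Madry et al. use the stronger multi-step PGD attack.
def generate_adversarial_examples(model, x, y, epsilon):
    # One signed-gradient step of size epsilon that increases the loss
    x = x.clone().detach().requires_grad_(True)
    loss = F.cross_entropy(model(x), y)
    (grad,) = torch.autograd.grad(loss, x)
    return (x + epsilon * grad.sign()).detach()


def adversarial_training_step(model, optimizer, x_batch, y_batch, epsilon):
    # Generate adversarial examples against the current model
    x_adv = generate_adversarial_examples(model, x_batch, y_batch, epsilon)
    # Train on a mixture of clean and adversarial examples
    combined_x = torch.cat([x_batch, x_adv], dim=0)
    combined_y = torch.cat([y_batch, y_batch], dim=0)
    # Standard training procedure on the combined batch
    optimizer.zero_grad()
    loss = F.cross_entropy(model(combined_x), combined_y)
    loss.backward()
    optimizer.step()
    return loss.item()
```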

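However, adversarial training has costs:

- **Lower clean accuracy.** It typically reduces accuracy on clean, non-adversarial data, reflecting a trade-off between robustness and standard accuracy.
- **Compute cost.** Every training step must first generate adversarial examples. Multi-step attacks such as projected gradient descent (PGD) multiply that cost by the number of attack steps.
- **Narrow protection.** The robustness it provides is specific to the threat model used during training (for example, small L∞-bounded perturbations) and may not carry over to other kinds of attacks.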

3. Defensive Preprocessing Techniques

In MLSecOps, input transformation (or input preprocessing) defenses modify or clean input data before it reaches the model, aiming to remove or weaken adversarial perturbations. Typical transformations include reducing color bit depth, smoothing or denoising, and re-compressing images. Their main advantages are practical. They are model-agnostic and do not require retraining. They can also double as detectors: an input whose prediction changes sharply after transformation is a candidate for filtering or review.

Preprocessing is not a replacement for adversarial training, however. Many input-transformation defenses that initially reported high robustness were later circumvented by adaptive attacks that account for the transformation. Adversarial training has held up better under such evaluations. In practice, preprocessing is best treated as one layer in a defense-in-depth strategy, combined with adversarially trained models and ongoing testing.

In summary, input transformations offer a flexible, low-cost complement to robust training. They are useful for filtering and detecting suspicious inputs, but only trustworthy when evaluated against attackers who know the transformation is in place.
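One concrete input transformation is feature squeezing. It reduces the precision of the input, for example by lowering bit depth, and then compares the model's predictions on the original and squeezed versions. A large disagreement suggests the input may carry a fine-grained adversarial perturbation. The sketch below is illustrative only: the toy linear model, the 3-bit depth, and `threshold=0.1` are all assumptions. A real deployment would calibrate the threshold on clean data.

```python
import numpy as np


def reduce_bit_depth(x, bits):
    """Quantize inputs in [0, 1] to 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return np.round(x * levels) / levels


def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()


def squeeze_score(predict, x, bits=3):
    """L1 distance between predictions on the raw and the squeezed input."""
    return float(np.abs(predict(x) - predict(reduce_bit_depth(x, bits))).sum())


def flag_if_suspicious(predict, x, threshold, bits=3):
    """Flag inputs whose prediction shifts sharply under squeezing."""
    return squeeze_score(predict, x, bits) > threshold


# Hypothetical two-class linear model; weights are illustrative only.
W = np.array([[3.0, -3.0, 3.0, -3.0],
              [-3.0, 3.0, -3.0, 3.0]])


def predict(x):
    return softmax(W @ x)


x_clean = np.array([3, 3, 3, 4]) / 7.0             # values on the 3-bit grid
x_adv = x_clean + 0.06 * np.array([1, -1, 1, -1])  # small push toward class 0

print("labels:", np.argmax(predict(x_clean)), np.argmax(predict(x_adv)))
print("clean flagged:", flag_if_suspicious(predict, x_clean, threshold=0.1))
print("adv flagged:  ", flag_if_suspicious(predict, x_adv, threshold=0.1))
```

The 0.06 perturbation is smaller than half a quantization step (1/14, about 0.071). Squeezing therefore snaps the adversarial input back onto the clean grid point, and the resulting prediction gap exposes it. An attacker who knows a squeezer is in place can craft perturbations that survive quantization. For that reason, detectors like this need to be evaluated against adaptive attacks.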

4. Adopting Rigorous Testing Protocols

Security and fairness testing should be as rigorous and standardized as functional testing:

- Red team exercises: Dedicated teams attempting to compromise or bias model outputs

- Automated testing suites: Running models against known adversarial attacks and bias checks

- Continuous monitoring: Watching for degradation in robustness or fairness in production
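An automated suite can end in a simple release gate: compare the evaluation metrics with agreed thresholds and fail the pipeline if any check fails. The sketch below shows one way to structure that gate. The metric names, the `Thresholds` fields, and their default values are hypothetical and would have to be set per application.

```python
from dataclasses import dataclass


@dataclass
class Thresholds:
    # Hypothetical release criteria; real values depend on the application.
    min_accuracy: float = 0.90
    min_robust_accuracy: float = 0.70
    max_parity_gap: float = 0.10


def check_model(metrics: dict, t: Thresholds) -> list:
    """Return human-readable failures; an empty list means the model passes."""
    failures = []
    if metrics["accuracy"] < t.min_accuracy:
        failures.append(f"accuracy {metrics['accuracy']:.2f} < {t.min_accuracy:.2f}")
    if metrics["robust_accuracy"] < t.min_robust_accuracy:
        failures.append(
            f"robust_accuracy {metrics['robust_accuracy']:.2f} < {t.min_robust_accuracy:.2f}"
        )
    if metrics["demographic_parity_diff"] > t.max_parity_gap:
        failures.append(
            f"demographic_parity_diff {metrics['demographic_parity_diff']:.2f}"
            f" > {t.max_parity_gap:.2f}"
        )
    return failures


# Metrics as they might arrive from an evaluation job (illustrative values).
metrics = {"accuracy": 0.94, "robust_accuracy": 0.62, "demographic_parity_diff": 0.04}
failures = check_model(metrics, Thresholds())
for failure in failures:
    print("FAIL:", failure)
exit_code = 1 if failures else 0  # a CI job would exit with this status
```

In this example, the model clears the accuracy and fairness bars but fails the robustness check. Gating on accuracy alone would never catch that.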

5. Diversifying Training Data

Models trained on more diverse datasets tend to be both more fair and more robust against attacks. This includes:

- Collecting data from underrepresented groups

- Using data augmentation techniques to increase diversity

- Implementing synthetic data generation to address gaps
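The following is a minimal sketch of one rebalancing step. It assumes tabular NumPy features with a known group label per row. Each underrepresented group is oversampled up to the size of the largest group, optionally with small Gaussian jitter as a crude form of augmentation. The function name and the jitter approach are illustrative assumptions. Resampling duplicates existing information rather than adding new examples, so it complements, rather than replaces, collecting more representative data. Synthetic generators such as SMOTE (available in the imbalanced-learn library) are a more elaborate alternative.

```python
import numpy as np


def oversample_groups(x, y, groups, rng, noise_std=0.0):
    """Resample so every group has as many rows as the largest group.

    Extra rows are drawn with replacement from the group's own data; optional
    Gaussian jitter (noise_std > 0) acts as a very simple augmentation.
    """
    uniq, counts = np.unique(groups, return_counts=True)
    target = counts.max()
    xs, ys, gs = [], [], []
    for g, c in zip(uniq, counts):
        idx = np.flatnonzero(groups == g)
        extra = rng.choice(idx, size=target - c, replace=True)
        x_extra = x[extra]
        x_extra = x_extra + rng.normal(0.0, noise_std, size=x_extra.shape)
        xs += [x[idx], x_extra]
        ys += [y[idx], y[extra]]
        gs += [groups[idx], groups[extra]]
    return np.concatenate(xs), np.concatenate(ys), np.concatenate(gs)


# Illustrative data: group "b" is heavily underrepresented.
rng = np.random.default_rng(0)
x = rng.normal(size=(10, 3))
y = rng.integers(0, 2, size=10)
groups = np.array(["a"] * 8 + ["b"] * 2)

x_bal, y_bal, g_bal = oversample_groups(x, y, groups, rng, noise_std=0.01)
print("rows per group:", (g_bal == "a").sum(), (g_bal == "b").sum())
```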

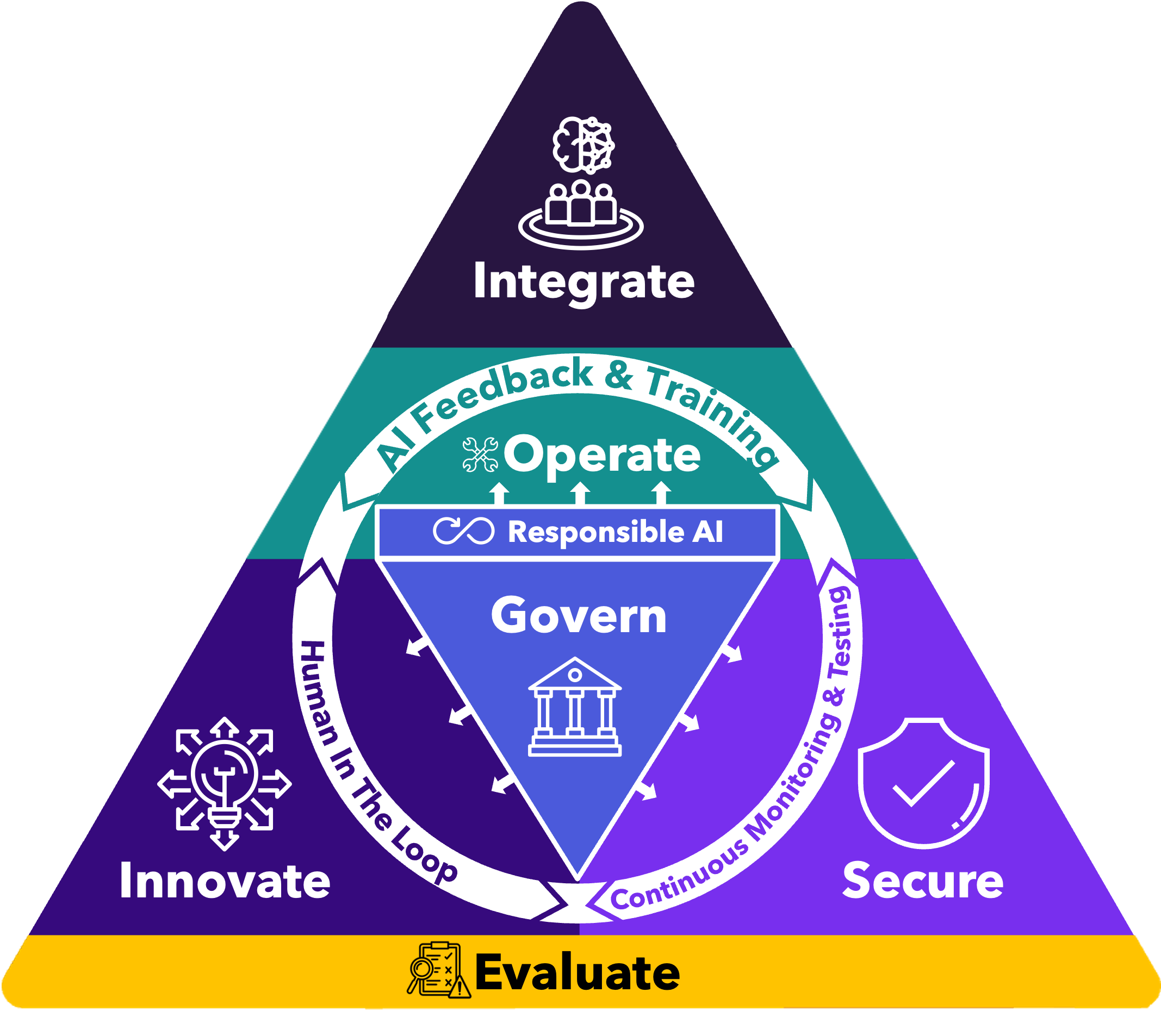

6. Establishing Governance and Accountability

Technical solutions alone aren’t sufficient. Organizations need:

- Clear policies for ML security and fairness

- Defined roles and responsibilities for monitoring and addressing issues

- Documentation requirements for model development and deployment

- Regular audits of deployed models

Case Study: Financial Fraud Detection

Consider a financial institution deploying an ML model to detect fraudulent transactions. A traditional MLOps approach might focus solely on maximizing the model’s ability to identify fraud while minimizing false positives.

An MLSecOps approach would additionally:

- Test the model against adversarial examples where fraudsters slightly modify their behavior to evade detection

- Evaluate whether the model flags transactions from certain demographic groups as “suspicious” at higher rates

- Implement runtime monitoring to detect potential adversarial inputs or emerging bias

- Establish clear procedures for investigating and addressing potential issues
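The group-disparity check and the runtime monitoring can be sketched as a per-batch monitor that compares how often each group's transactions are flagged. As an assumption for illustration, the alert fires when the lowest group flag rate falls below 80% of the highest. That threshold is borrowed loosely from the "four-fifths" rule of thumb used in employment-selection audits. A real institution would choose metrics and thresholds with legal and domain input. It may also need to collect group attributes separately, purely for auditing. The function names are hypothetical.

```python
import numpy as np


def flag_rates(flags, groups):
    """Share of transactions flagged as suspicious, per group."""
    return {str(g): float(np.mean(flags[groups == g])) for g in np.unique(groups)}


def flag_rate_ratio(flags, groups):
    """Lowest group flag rate divided by the highest (1.0 means parity)."""
    rates = list(flag_rates(flags, groups).values())
    highest = max(rates)
    return 1.0 if highest == 0 else min(rates) / highest


def monitor_batches(batches, min_ratio=0.8):
    """Yield (batch_index, ratio, alert) for each batch of production flags."""
    for i, (flags, groups) in enumerate(batches):
        ratio = flag_rate_ratio(flags, groups)
        yield i, ratio, ratio < min_ratio


# Two illustrative batches of model decisions (1 = flagged as suspicious).
g = np.array(["a"] * 4 + ["b"] * 4)
batches = [
    (np.array([1, 0, 0, 0, 1, 0, 0, 0]), g),  # equal flag rates
    (np.array([1, 0, 0, 0, 1, 1, 0, 0]), g),  # group "b" flagged twice as often
]
results = list(monitor_batches(batches))
for i, ratio, alert in results:
    print(f"batch {i}: flag-rate ratio {ratio:.2f}" + ("  ALERT" if alert else ""))
```

An alert here is a prompt for the investigation procedures described above, not proof of bias. Different flag rates can also reflect genuine differences in fraud patterns.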

The Path Forward: MLSecOps

The integration of security, fairness, and operational excellence gives rise to MLSecOps—a comprehensive approach that treats security and fairness as first-class citizens in the ML lifecycle.

This approach requires:

- Cultural shift: Moving from “move fast and deploy” to “move thoughtfully and protect”

- Investment in tools: Developing and adopting tools for adversarial testing and fairness evaluation

- Process integration: Building security and fairness checks into CI/CD pipelines

- Skills development: Training teams on security threats and fairness considerations

Conclusion

As ML systems become more deeply integrated into critical infrastructure and decision-making processes, the stakes for getting security and fairness right continue to rise. Organizations that treat these considerations as afterthoughts risk not only reputational damage but potentially catastrophic failures.

By expanding our view of MLOps to incorporate security and fairness from the start—embracing MLSecOps—we can build AI systems that are not only powerful and efficient but also resilient and just. The challenge is significant, but the alternative—deploying vulnerable and biased systems at scale—is far more costly in the long run.

The time to address the blind spot in MLOps is now, before the next generation of ML systems is deployed.

References

[^1]: Eykholt, K., Evtimov, I., Fernandes, E., Li, B., Rahmati, A., Xiao, C., … & Song, D. (2018). Robust physical-world attacks on deep learning visual classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 1625-1634).

[^2]: Carlini, N., Mishra, P., Vaidya, T., Zhang, Y., Sherr, M., Shields, C., … & Zhou, W. (2016). Hidden voice commands. In 25th USENIX Security Symposium (USENIX Security 16) (pp. 513-530).

[^3]: Ebrahimi, J., Rao, A., Lowd, D., & Dou, D. (2018). HotFlip: White-box adversarial examples for text classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (pp. 31-36).

[^4]: Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464), 447-453.

[^5]: Buolamwini, J., & Gebru, T. (2018). Gender shades: Intersectional accuracy disparities in commercial gender classification. In Conference on Fairness, Accountability and Transparency (pp. 77-91).

[^6]: Dastin, J. (2018). Amazon scraps secret AI recruiting tool that showed bias against women. Reuters.

[^7]: Xu, H., Ma, Y., Liu, H. C., Deb, D., Liu, H., Tang, J. L., & Jain, A. K. (2020). Adversarial attacks and defenses in images, graphs and text: A review. International Journal of Automation and Computing, 17(2), 151-178.

[^8]: Madry, A., Makelov, A., Schmidt, L., Tsipras, D., & Vladu, A. (2018). Towards deep learning models resistant to adversarial attacks. In International Conference on Learning Representations (ICLR). arXiv:1706.06083.